Blog, 2021

In conversation with Michael Ralla, VFX Supervisor at Framestore

Following his first in-depth LED virtual production project, Michael Ralla, VFX Supervisor at Framestore in LA, sat down with us to chat about his experience using disguise in film, the future of the industry and the new opportunities for collaboration across production departments.

Tell us about how you were first introduced to disguise.

At first, I didn't really understand what disguise was! There was a Katy Perry performance that disguise Chief Commercial Officer Tom Rockhill posted on LinkedIn, and at that point I’d already been doing a lot of plate-based LED shoots with Quantum of Solace Director Marc Forster. I knew there was a real-time engine involved, but I wasn’t exactly clear on the role of disguise within that entire ecosystem.

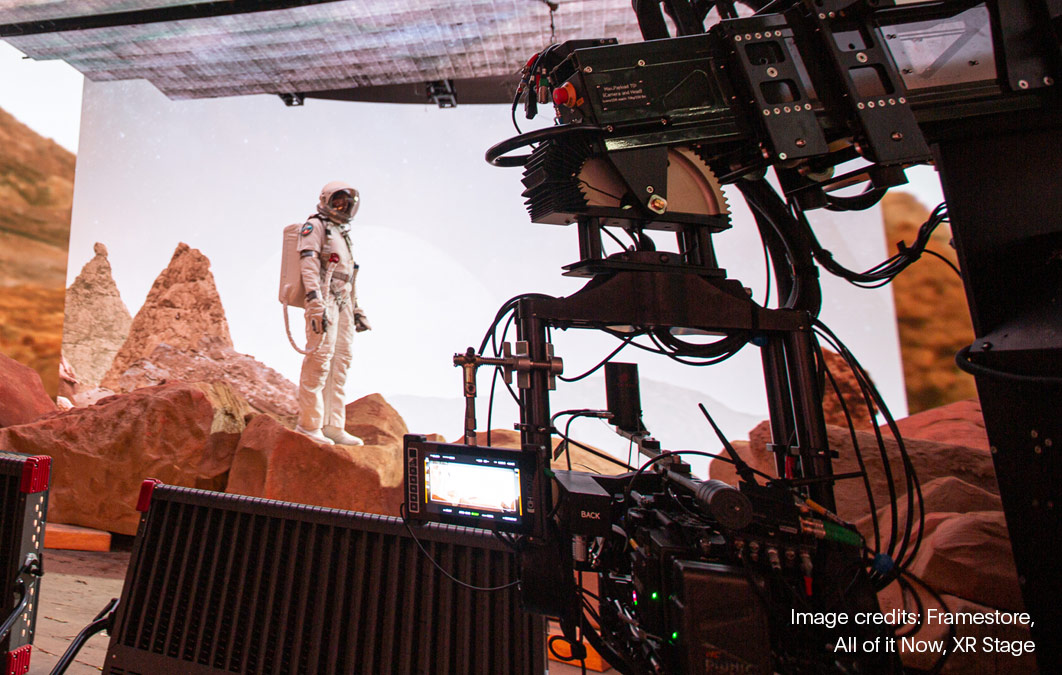

So Marcus Bengston, disguise Technical Solutions Specialist, and I had a call and got into the nitty-gritty of xR. That made me want to put together a test shoot with an actual cinematic narrative, with the objective of photorealistically and seamlessly marrying a practical set with a virtual set extension. We ended up shooting a piece called Blink at XR Stage at the end of last year, and disguise was a big part of that.

Can you tell us a little bit more about your role on the Blink shoot?

I conceptualised the whole project, wrote the script and then co-directed it with a friend of mine, Preston Garrett, who is a partner in Marc Forster's production company Rakish. We also had live-action producer Lee Trask on board. She now focuses almost solely on LED shoots, has become very passionate about the medium and is probably one of the most knowledgeable producers out there when it comes to LED tech. Only a handful of people in the industry really understand what goes into shooting a live-action commercial on an LED stage, and Lee is one of them.

Coming from the digital world, we built the virtual environments first, and the live-action pieces then had to be shoehorned into them. Normally you would approach it from the other end: the production designer creates a series of concepts, and you take those and extend the real world into the digital world.

That process poses some really interesting challenges and questions. For instance, how do you communicate colours from the virtual world into the real world?

So would you say that disguise gave you a little bit more creative control?

Oh, absolutely. You have the tools at your fingertips to bring your vision to life virtually at very little cost and show people what you've created. Instead of throwing that work away, you have an end-to-end workflow where the same assets can be placed on the LED wall and shot directly. That definitely gives you a lot more creative control.

With this process, there's no linear succession of defined phases anymore. Everything just flows together in parallel, and what comes with that is a lot of collaboration.

Why did you decide to use disguise over building something bespoke?

After some research, disguise turned out to be the most reliable solution to get our content on the wall with the lowest possible latency and the highest possible reliability. And especially with the support that we were getting from the disguise team, it was clearly the solution that gave me the most peace of mind at the time. I like the fact that there is a clear division between content generation in-engine, and the tech to get it on the wall. There’s a huge psychological impact if the wall goes down for even a short time, and in a real production situation, I’d rather pay upfront for a rock-solid solution so that the associated cost can be factored in from the start, instead of having to pay extra for crew waiting around while the wall is black.

I can't stress enough how reassuring it was to know that the whole interface, from the Unreal Engine component to the wall, was taken care of. It was absolutely bulletproof. The fact that we could easily switch to high-resolution footage playback was also very important to me, as one of our setups featured 16K x 8K 360° skydiving footage captured by Joe Jennings during a real skydive.

"disguise turned out to be the most reliable solution to get our content on the wall with the lowest possible latency and the highest possible reliability."

Michael Ralla, VFX Supervisor at Framestore

Genlocking the camera and the wall is fully taken care of, and the hardware is optimised for a smooth workflow. The disguise software also provides solutions for geometric wall calibration, colour calibration and lens calibration, all of which are important, in particular when it comes to avoiding moiré and LED line glitches. A common approach to minimising visible LED wall artefacts is to shoot with large-format sensors and vintage anamorphic lenses, which accentuates the shallow depth of field and adds interesting optical byproducts such as distortion, glows, aberrations and flares. Generally, I noticed that moiré patterns are the first and most obvious thing my VFX industry colleagues worry about when they first learn about LED shooting. In practice, I found them to be much less of an issue.

Much more critical is the lighting and colour science aspect. Most fine-pitch LED panels have narrow-band primaries, which show up as clearly visible spikes across the spectrum. If the wall is the only light source, colours seem to react in a slightly more granular way in the grade: they "tip" over with a noticeable shift. Once additional light sources with a broader spectrum are introduced, the response becomes noticeably smoother, similar to a perceived "wider" gamut.

Having said that, once practical lighting enters the volume, whether for technical or artistic reasons, it is extremely easy to "flash" the black levels of the wall. The visual result is a drastic loss of contrast, with the deepest blacks getting clamped into dark, flat patches without any definition or detail. It is very important to flag those fixtures off the wall as much as possible to avoid contamination and spill.
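To make the "flashed blacks" effect concrete, here is a back-of-the-envelope sketch. It assumes that stray light reflected off the panel surface simply adds the same luminance to both the brightest and the darkest pixels the camera sees; the function name and all nit values are hypothetical illustrations, not measurements from the Blink shoot.

```python
def effective_contrast(peak_nits: float, black_nits: float, stray_nits: float) -> float:
    """Simultaneous contrast ratio of the wall as seen by the camera, assuming
    stray light adds a uniform luminance offset (in nits) to every pixel."""
    return (peak_nits + stray_nits) / (black_nits + stray_nits)


# Hypothetical wall: 1000-nit peak and 0.05-nit native black level.
for stray in (0.0, 0.5, 5.0):
    ratio = effective_contrast(1000.0, 0.05, stray)
    print(f"stray light {stray:>4} nits -> contrast {ratio:,.0f}:1")
```

With these illustrative numbers, half a nit of spill already drops the contrast from roughly 20,000:1 to about 1,800:1, because the offset dominates the tiny native black level while barely changing the peak. That asymmetry is why flagging fixtures matters more for the blacks than for the highlights.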

Last but not least comes colour space, which is often treated as an area of black magic but can make or break a shoot. Generally, LED content, whether real-time or plate-based, should rarely be creatively graded; the intent should be to mimic the real world as closely as possible, including its dynamic range. An ACES workflow gets you halfway there, but it is key to use PQ encoding to make use of the full dynamic range the LED panels can currently offer, and to calibrate the whole image pipeline, from the source image to the final camera output on screen, to be as accurate as possible.
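PQ here refers to the perceptual quantizer transfer function standardised as SMPTE ST 2084, which encodes absolute luminance up to 10,000 nits. The sketch below shows the encode/decode pair so you can see how a given panel luminance maps to a signal value. The constants are the published ST 2084 ones; the function names are our own, and this is not a description of how disguise or ACES implement PQ internally.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10000.0


def pq_encode(nits: float) -> float:
    """Inverse EOTF: absolute luminance in nits -> normalised PQ signal in [0, 1]."""
    y = min(max(nits / PQ_PEAK_NITS, 0.0), 1.0)  # normalise to the 10,000-nit range
    ym = y ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


def pq_decode(signal: float) -> float:
    """EOTF: normalised PQ signal in [0, 1] -> absolute luminance in nits."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    y = (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)
    return y * PQ_PEAK_NITS


print(f"100 nits -> PQ {pq_encode(100.0):.4f}")
```

Because PQ is defined in absolute nits rather than relative to a display's white point, the same code value means the same luminance on any calibrated wall; round-tripping `pq_decode(pq_encode(nits))` back to the input is a quick sanity check when wiring up a calibration pipeline.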


What advice would you give to folks who are looking at similar virtual production jobs in the future?

The good thing about virtual production projects is that you can do a lot of prep work at home. Unreal Engine is free, and there is a lot of really good learning material available. Once your content is on the wall and you film it through the lens of a camera, you get all the beautiful artefacts for free, and there's no arguing about what it would look like in-camera, because you are capturing it all in that moment. Having said that, it really takes a good eye on stage to dial in the lighting, exposure levels and contrast of the virtual content; simply shooting on an LED stage doesn't mean you're going to get a photorealistic result. There is also a big difference between what looks photorealistic to the eye and what looks "real", or rather cinematic, through the lens of the camera.


The whole process is a huge collaboration. Essentially, we are creating set extension VFX shots in-camera, and as a VFX artist you still have to work with the image to make it look photoreal, but without the many iterations over a longer period of time that you would have with traditional bluescreen shots. You are on the spot and have to commit to a decision within minutes. The advantage, at that moment, is that you are not making those decisions alone: you have the DP, Director and Production Designer right next to you and can tackle issues together.

How would you compare working with LED volumes versus green screen?

The advantages in accuracy with the LED screen are clear. And everyone, from the Director to the talent, sees it in real time, so you don't have to imagine "this monster is fighting me and now I have to point my sword at this imaginary thing". You can react to things in the moment.

The other, quite obvious aspect is the interactive lighting and reflection component.

What do you see as the future for virtual production?

To me, it's another tool in the toolbox — an extremely powerful tool. It lends itself to projects where you have very little time and you need to shoot in a variety of different locations.

Let's say you want to shoot Death Valley, Stonehenge and Florence, but you only have one day. You may be able to send splinter units to capture the locations, but you have a highly paid actor, let's say Brad Pitt, and only one day to shoot all of these environments with him. With an LED approach, you can bring them all onto the stage virtually. That's what it's perfect for. Another perfect use case is reshoots, especially if you have reconstructions of the original sets.

We’re now getting to a point where all the tools are starting to work and we’re learning how to get really good results. It’s not for everyone, there are strengths and weaknesses, and you really have to know what those are in order to use the technology successfully.

That's why I think it's so important that people who own xR stages are happy to lend them to people who want to play with them. It makes sense to give teams the opportunity to train themselves and learn the tools.

Do you think virtual production might create new job roles?

Absolutely. There's a need for people who know how to create real-time photorealistic content, and they are really hard to come by. So now we're seeing existing talent who already know how to create photorealistic content being trained in real-time tools in-house. I think we'll also start to see a lot of new roles dedicated to in-camera VFX and virtual production.

People are now hungrier than ever for good content, especially since the pandemic, when live events were cancelled. All of a sudden, we realised we could use tools from live events to actually produce content for films and television series. I'm really curious about what's going to happen once live events resume… I do think it's all meant to coexist, and we will see some really good examples of overlap between physical and virtual events.
